International Center for Quantum-field Measurement Systems for Studies of the Universe and Particle (WPI-QUP),

Institute of Particle and Nuclear Studies (IPNS),

High Energy Accelerator Research Organization (KEK)

Executive summary

Question

Can a highly specialized large language model (LLM) with only eight billion parameters match the performance of far larger and more expensive general‑purpose AIs, such as GPT‑4o, on cosmology tasks?

Findings

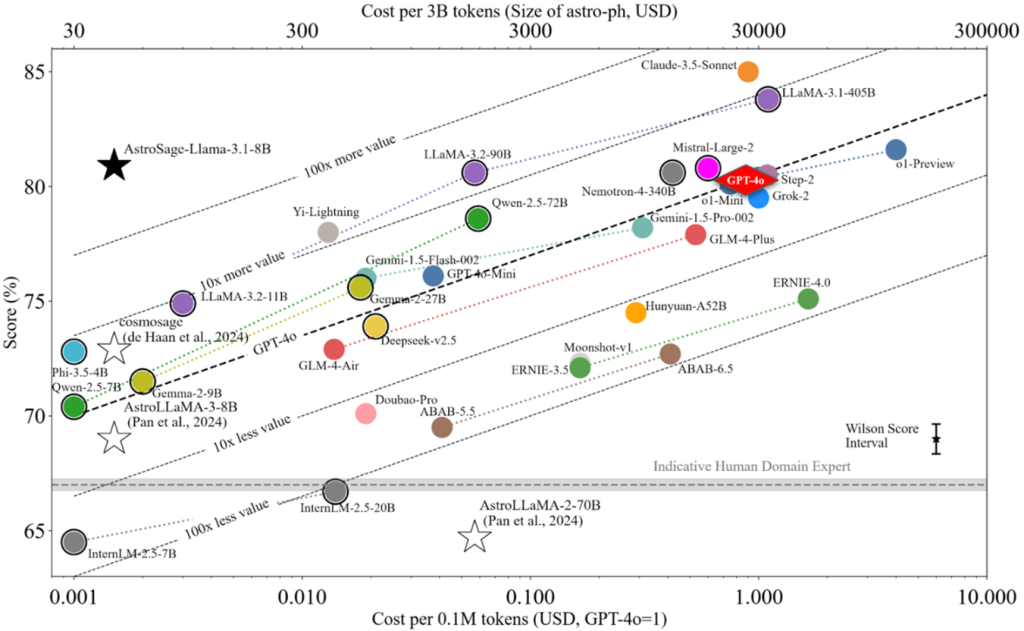

A team led by Prof. Tijmen de Haan of IPNS/WPI‑QUP at KEK developed “AstroSage‑8B,” a large language model trained as an AI assistant on 250,000 preprints in astronomy, astrophysics, cosmology, and astronomical instrumentation. On the 4,425‑question AstroMLab‑1 benchmark, the model answers 80.9% of the questions correctly, outperforming OpenAI’s GPT‑4o at roughly one thousandth of the cost.

Meaning

The study demonstrates that, given carefully curated data on a specific subject, combining continued pre‑training, supervised fine‑tuning, and model merging can yield a small, open‑weight model that outperforms much larger models. This lowers the barrier for academic institutions with modest budgets to deploy powerful AI, and it also paves the way for the development of autonomous research tools.
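The release does not describe how the models were merged. As a rough illustration of what “model merging” can mean, the sketch below assumes the simplest variant: linear interpolation between the weights of two checkpoints that share the same architecture. The function `linear_merge`, the toy parameter names, and the mixing weight `alpha` are hypothetical and are not taken from the AstroSage‑8B pipeline.

```python
# Minimal sketch of linear model merging (parameter interpolation).
# Assumption: each "model" is a dict mapping parameter names to flat lists
# of floats with matching lengths; real checkpoints would hold tensors.
from typing import Dict, List

Params = Dict[str, List[float]]


def linear_merge(model_a: Params, model_b: Params, alpha: float = 0.5) -> Params:
    """Return alpha * model_a + (1 - alpha) * model_b, parameter by parameter."""
    if not 0.0 <= alpha <= 1.0:
        raise ValueError("alpha must be in [0, 1]")
    if model_a.keys() != model_b.keys():
        raise ValueError("models must have the same parameter names")
    merged: Params = {}
    for name, weights_a in model_a.items():
        weights_b = model_b[name]
        if len(weights_a) != len(weights_b):
            raise ValueError(f"shape mismatch for parameter {name!r}")
        # Element-wise weighted average of the two checkpoints' values.
        merged[name] = [alpha * wa + (1.0 - alpha) * wb
                        for wa, wb in zip(weights_a, weights_b)]
    return merged


# Hypothetical toy "checkpoints": a domain-tuned model and a general model.
domain_model = {"layer.weight": [1.0, 2.0], "layer.bias": [0.5]}
general_model = {"layer.weight": [3.0, 0.0], "layer.bias": [1.5]}

merged = linear_merge(domain_model, general_model, alpha=0.75)
print(merged)  # {'layer.weight': [1.5, 1.5], 'layer.bias': [0.75]}
```

Here `alpha` controls how much of the domain‑tuned model is kept; published merging methods are often more elaborate than a plain weighted average.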

Overview

Can a smaller, highly specialized AI match or exceed the performance of giant, general‑purpose AIs, and do so at lower cost? That is the question Prof. Tijmen de Haan and his collaborators set out to answer, and AstroSage‑8B answers it with a resounding “yes.” Most headline‑grabbing AIs contain hundreds of billions to trillions of numerical “weights” (parameters) and cost a fortune to train and operate. Working from KEK’s QUP and IPNS institutes, de Haan taught an 8‑billion‑parameter model, less than one hundredth the size of GPT‑4o, to understand and reason in astronomy, astrophysics, astroparticle physics, cosmology, space science, and astronomical instrumentation (hereafter shortened to “astronomy”). These findings, which demonstrate the power of specialized AI, were published in Scientific Reports on April 21, 2025.

Please refer to the press release for details.

Contacts

High Energy Accelerator Research Organization (KEK) Public Relations Office

e-mail: press@kek.jp